What if you could understand the climate impact of a food product just by snapping a picture of it?

That’s the idea behind our recent PoC (Proof of Concept) project: making carbon footprint data more accessible without adding friction to the consumer experience. Instead of expecting people to scan QR codes, search databases, or read through environmental reports, we explore how agentic AI on Databricks can do the work in the background.

In this article, we break down how we designed an end-to-end system using Databricks agents and Agentic AI patterns to estimate the CO₂ footprint of food products based on a single image. From structured nutrition tables to unstructured packaging visuals, we show how different types of AI agents can collaborate to handle real-world complexity, while keeping the user experience simple and smooth.

Use Case: Estimating the Climate Score of Food from a Single Picture

For consumers, it is difficult to take the carbon footprint of food items into account when shopping. This information is rarely visible, unlike nutrition scores, which are more standardized.

Estimating reliable carbon footprints for food products is also a complex problem. Information in Life Cycle Assessment (LCA) databases is limited and fragmented, and a reliable estimate requires consistently allocating emissions across manufacturers, suppliers, recyclers, consumers, and waste handlers.

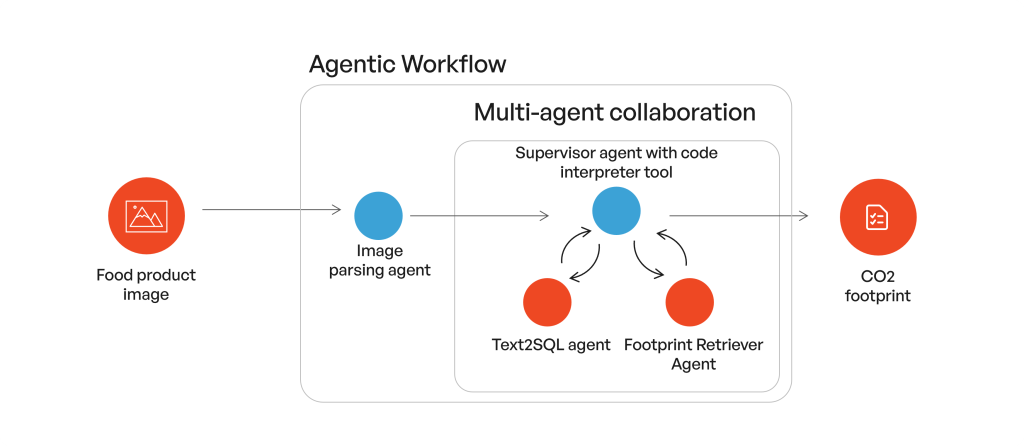

As a result, consumers would benefit from an Agentic AI system that can roughly estimate the carbon footprint of food items based on smartphone images or videos of product packaging or the food product itself (Figure 1).

Since a single agent will struggle to solve this problem end to end, it is a good opportunity to showcase different agentic design patterns tailored to the needs of each sub-step. The use case also illustrates the motivation for multi-agent collaboration: giving users a single end-to-end task-solving interface so that they can make informed decisions.

Agentic AI Design Patterns

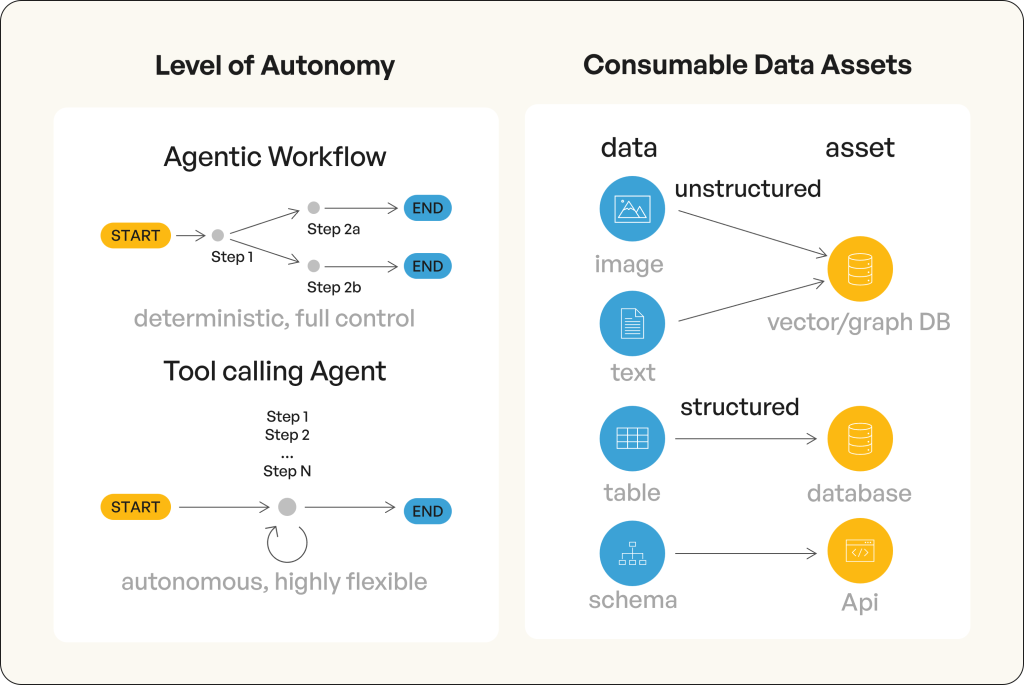

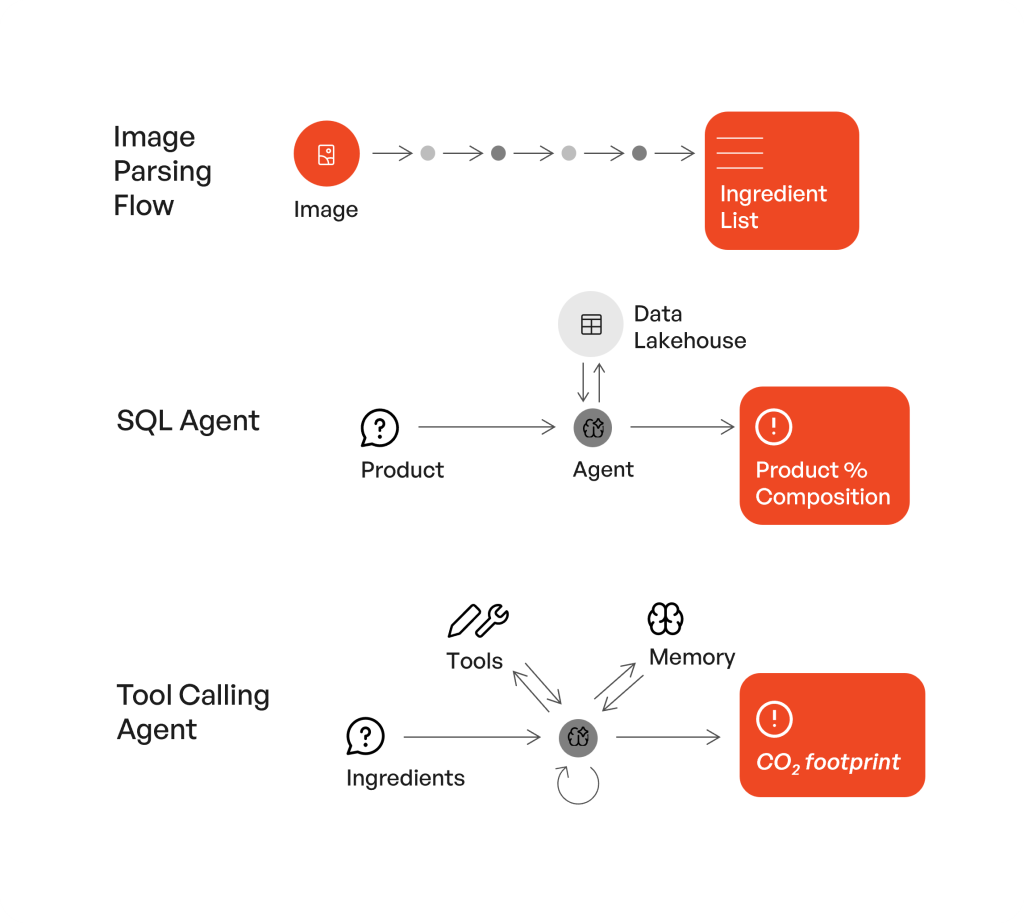

The choice of design pattern depends primarily on the level of autonomy required by the agent (Figure 2). With an agentic workflow, a sequence of LLM invocation steps with branching, parallelization, aggregation, and looping logic is predefined. This offers maximum control over the task, with a defined sequence of steps and specified terminal states. Agentic workflows are well suited to high-stakes use cases where errors cannot be tolerated and the sequence of steps must be fully controlled.

This covers high-risk scenarios in which unexpected behavior cannot be accepted, as well as use cases where the required sequence of steps can be easily outlined. However, an agentic workflow has limited flexibility and adaptability. Autonomous tool-calling agents based on frameworks such as ReAct (iterations of reasoning, acting, and observing) are able to make independent decisions. They define the necessary steps to solve the task and call the required tools on their own.

You can add guardrails by requiring user approval for specific actions or by prompting the agent to ask follow-up questions when it is uncertain. In an ideal scenario, an autonomous agent can also reflect on and correct its own intermediate states.
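The autonomous loop with an approval guardrail can be sketched in plain Python. This is a minimal illustration and not a Databricks or LangGraph API: `scripted_policy` stands in for the LLM, and the tool name, the `NEEDS_APPROVAL` set, and the footprint values are hypothetical.

```python
from typing import Callable

# Hypothetical tool; the values are made up for illustration (kg CO2e per kg).
def lookup_footprint(ingredient: str) -> str:
    table = {"sugar": "0.9", "wheat flour": "0.8"}
    return table.get(ingredient, "unknown")


TOOLS: dict[str, Callable[[str], str]] = {"lookup_footprint": lookup_footprint}
NEEDS_APPROVAL = {"web_search"}  # actions that require explicit user approval


def scripted_policy(observations: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM: picks the next (action, argument) from past observations."""
    if not observations:
        return "lookup_footprint", "sugar"
    return "finish", f"sugar footprint: {observations[-1]} kg CO2e/kg"


def run_agent(policy, approve: Callable[[str, str], bool], max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, arg = policy(observations)  # reason: decide what to do next
        if action == "finish":
            return arg
        if action in NEEDS_APPROVAL and not approve(action, arg):
            observations.append(f"{action} denied by user")  # guardrail triggered
            continue
        tool = TOOLS.get(action)
        if tool is None:
            observations.append(f"unknown tool {action}")
            continue
        observations.append(tool(arg))  # act, then record the observation
    return "stopped: step limit reached"


result = run_agent(scripted_policy, approve=lambda action, arg: False)
print(result)
```

The step limit acts as a simple terminal state, so a policy that keeps requesting a denied action cannot loop forever.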

Another critical factor influencing agent design is the type of data asset that the AI application must consume to give the agent the context it needs for data-driven decisions. Agents typically consume unstructured data objects such as images, text, audio, and heterogeneous documents from graph or vector databases using semantic search and graph traversal. In contrast, structured sources such as APIs or relational databases are consumed via Model Context Protocol (MCP) servers and text-to-query transformations.

Solution Design for Carbon Footprint Estimation

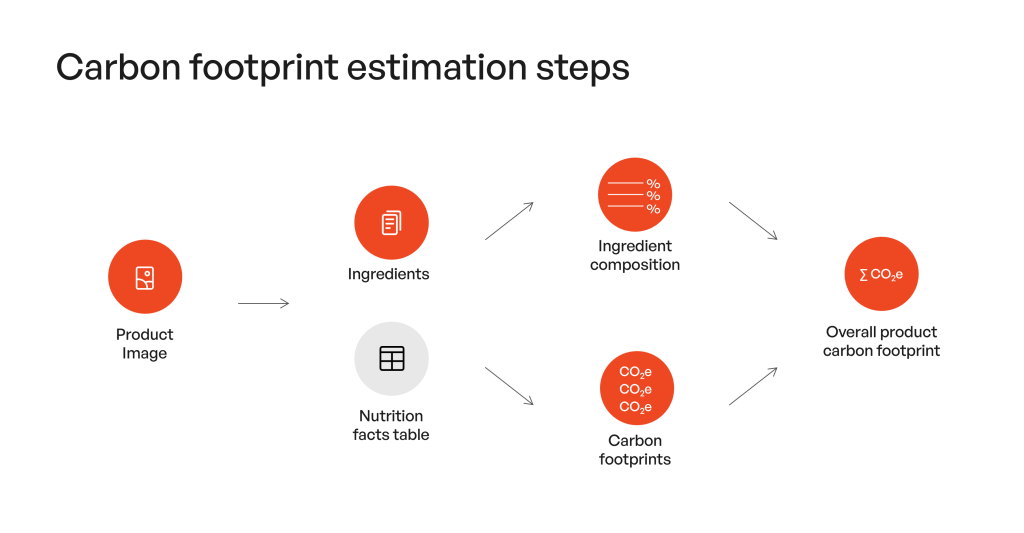

The carbon footprint estimation is broken down into three focused subtasks (Figure 3):

- Extract the ingredient information and nutrition facts table from the food product image.

- Extract the food product's ingredient composition from proprietary inventory database tables, or estimate it by calculating mass contributions from the nutrition facts. For example, the percentage of sugar might be estimated from the mass of carbohydrates per 100 g of product.

- Identify the CO2 footprint for each individual ingredient and compute an aggregated footprint based on the product’s percentage composition or the estimated composition according to the nutrition facts table.
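The last two subtasks come down to simple arithmetic: a mass fraction per ingredient, then a mass-weighted sum of per-ingredient footprints. The sketch below is one way to write this, assuming footprints are expressed in kg CO2e per kg of ingredient. The function names, the 5% tolerance, and all numbers are illustrative assumptions, not values from the project.

```python
def sugar_fraction_from_nutrition(sugars_g_per_100g: float) -> float:
    """Estimate the sugar mass fraction from the nutrition table (grams per 100 g)."""
    return sugars_g_per_100g / 100.0


def aggregate_footprint(composition: dict[str, float], factors: dict[str, float]) -> float:
    """Return kg CO2e per kg of product as a mass-weighted sum.

    composition: mass fraction of each ingredient (should sum to roughly 1.0)
    factors: footprint of each ingredient in kg CO2e per kg of ingredient
    """
    total_fraction = sum(composition.values())
    if abs(total_fraction - 1.0) > 0.05:  # tolerance is an arbitrary choice
        raise ValueError(f"mass fractions sum to {total_fraction:.2f}, expected about 1.0")
    missing = [name for name in composition if name not in factors]
    if missing:
        raise KeyError(f"no footprint factor for: {missing}")
    return sum(fraction * factors[name] for name, fraction in composition.items())


# Made-up example product and emission factors.
composition = {
    "sugar": sugar_fraction_from_nutrition(30.0),
    "wheat flour": 0.50,
    "butter": 0.20,
}
factors = {"sugar": 1.0, "wheat flour": 0.8, "butter": 9.0}

total = aggregate_footprint(composition, factors)
print(f"{total:.2f} kg CO2e per kg of product")
```

With these made-up inputs the result is roughly 2.5 kg CO2e per kg, dominated by the butter even though it is the smallest ingredient by mass.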

This divide-and-conquer approach to CO2 footprint evaluation translates into three types of AI agents (Figure 4):

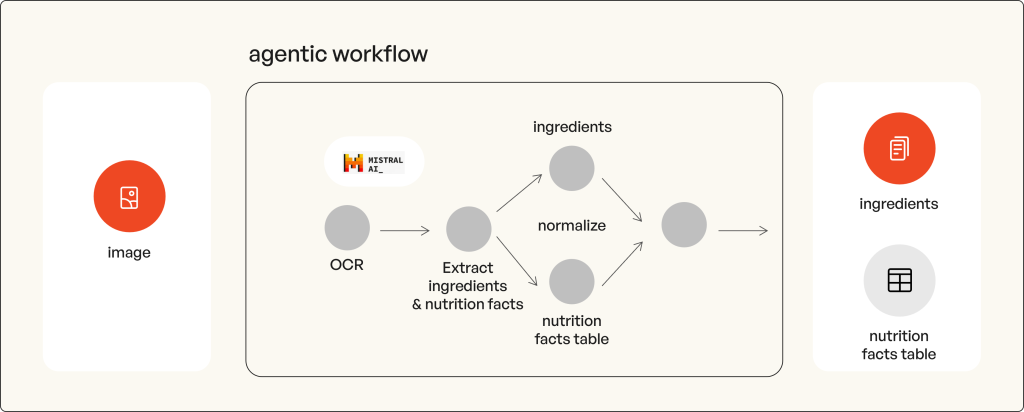

- An image parsing agent uses OCR to extract the text from food product images, followed by parallel structuring of the extracted text into the ingredient list and the nutrition facts table.

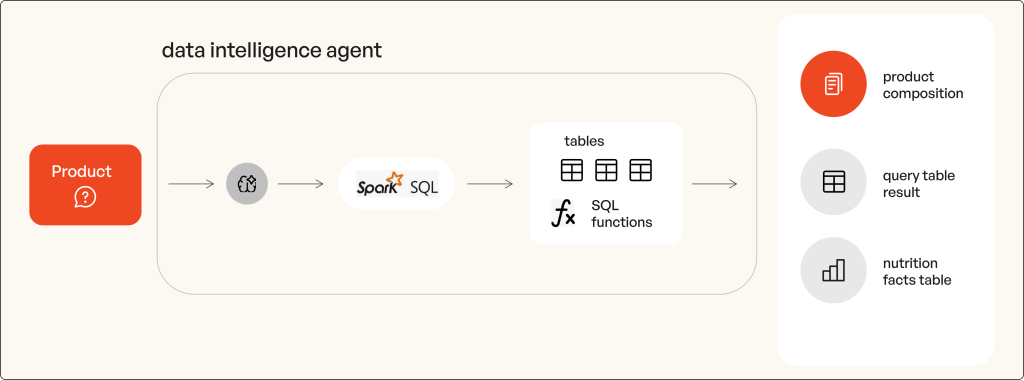

- A data intelligence Text2SQL agent identifies information such as the percentage ingredient composition of food products.

- A CO2 footprint assignment and calculation agent takes the list of ingredients and the product’s percentage composition, conducts a research phase for the individual ingredients, and then runs an aggregation stage to compute the overall CO2 footprint.

Translating the AI Use Case into Agentic AI Design Patterns

Agent 1: Parsing Images to Extract Ingredients and Nutrition Facts

An agentic workflow built with LangGraph is well suited to extracting the ingredient list and nutrition facts table from the input image (Figure 5). The inputs and outputs are well defined, and the different target schemas can be extracted from the source image text in parallel. This design offers full control over the sequence of steps and uses Mistral OCR as the text extractor.
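The fan-out and fan-in shape of this workflow can be sketched without any agent framework. In the sketch below, the two regex parsers are simple stand-ins for the LLM structuring steps, `OCR_TEXT` is a hypothetical OCR result, and a thread pool plays the role of the parallel branches. None of this is the LangGraph or Mistral OCR API.

```python
import re
from concurrent.futures import ThreadPoolExecutor

# Hypothetical OCR output for a product label.
OCR_TEXT = """Ingredients: wheat flour, sugar, butter, salt
Nutrition facts (per 100 g)
Energy 450 kcal
Carbohydrates 60 g
Fat 20 g
Protein 7 g"""

NUTRIENT_LINE = re.compile(r"([A-Za-z ]+?)\s+(\d+(?:\.\d+)?)\s*(g|kcal)")


def parse_ingredients(text: str) -> list[str]:
    """Stand-in for the LLM step that structures the ingredient list."""
    match = re.search(r"Ingredients:\s*(.+)", text)
    if not match:
        return []
    return [item.strip() for item in match.group(1).split(",") if item.strip()]


def parse_nutrition(text: str) -> dict[str, float]:
    """Stand-in for the LLM step that structures the nutrition facts table."""
    facts: dict[str, float] = {}
    for line in text.splitlines():
        match = NUTRIENT_LINE.fullmatch(line.strip())
        if match:
            facts[match.group(1).lower()] = float(match.group(2))
    return facts


def parse_label(text: str) -> dict:
    """Run both structuring steps in parallel on the same OCR text, then merge."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        ingredients = pool.submit(parse_ingredients, text)
        nutrition = pool.submit(parse_nutrition, text)
        return {"ingredients": ingredients.result(), "nutrition": nutrition.result()}


label = parse_label(OCR_TEXT)
print(label)
```

Because both branches read the same text and write to separate output fields, they have no shared state and can run independently before the merge step.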

Agent 2: Querying Structured Data to Estimate Ingredient Composition

To extract insights from structured data sources using natural language, Text2SQL data intelligence agents are a good choice (Figure 6). Predefined SQL queries that LLMs can parametrize are an alternative when less flexibility is needed. Databricks offers Genie agents for this purpose, which provide data intelligence insights through a natural language interface.

Genie agents rely on semantically rich metadata descriptions of catalogues, schemata, tables, and columns, and can also access business rules defined as SQL functions. For example, a dedicated SQL function can define what counts as an active contract for a specific business unit. The Genie agent translates the user query into Spark SQL, executes the SQL query, and summarizes the results as text, as a table, and as plots.
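The less flexible alternative, a predefined query that the LLM only parametrizes, can be sketched as follows. The table name, columns, and rows are hypothetical, and the standard-library `sqlite3` module stands in for the warehouse purely so the example is self-contained. It does not show how Genie works.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory table with made-up composition data."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE product_composition (product TEXT, ingredient TEXT, mass_percent REAL)"
    )
    conn.executemany(
        "INSERT INTO product_composition VALUES (?, ?, ?)",
        [
            ("butter cookies", "wheat flour", 50.0),
            ("butter cookies", "sugar", 30.0),
            ("butter cookies", "butter", 20.0),
        ],
    )
    return conn


# The query text is fixed; the model only supplies the product name.
COMPOSITION_QUERY = """
SELECT ingredient, mass_percent
FROM product_composition
WHERE product = ?
ORDER BY mass_percent DESC
"""


def get_composition(conn: sqlite3.Connection, product: str) -> dict[str, float]:
    """Return {ingredient: mass percent} for a product, or {} if it is unknown."""
    rows = conn.execute(COMPOSITION_QUERY, (product,)).fetchall()
    return {ingredient: percent for ingredient, percent in rows}


conn = build_demo_db()
print(get_composition(conn, "butter cookies"))
```

Binding the product name as a parameter keeps model-generated text out of the SQL string, which is the main safety benefit of this pattern over free-form Text2SQL.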

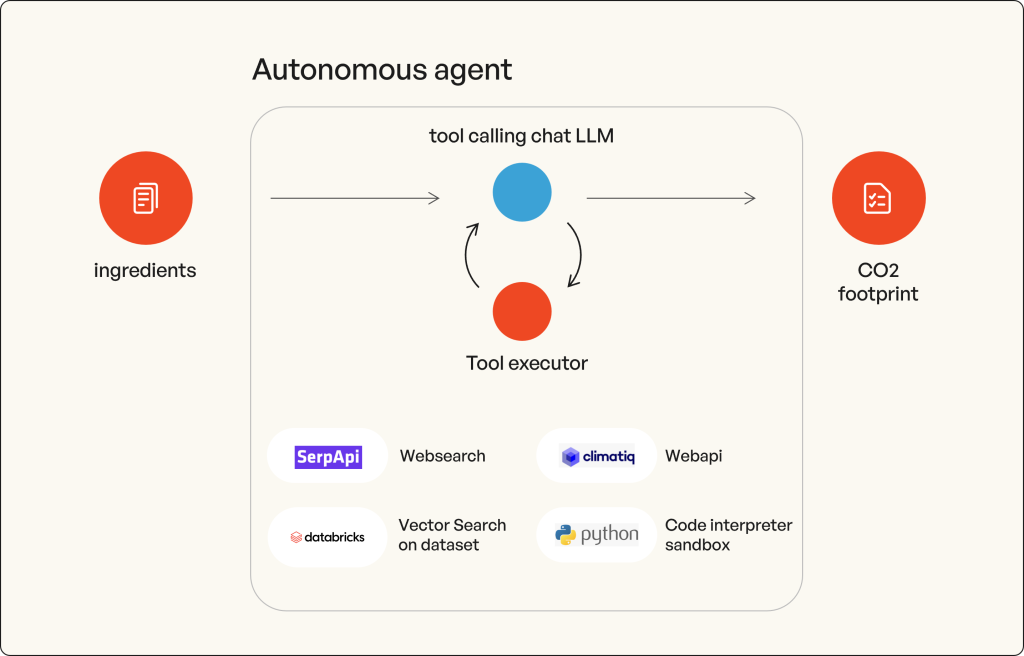

Agent 3: Calculating the Carbon Footprint with Autonomous Tool-Calling

To identify the carbon footprint for individual food product items, it is necessary to have access to various data sources such as:

- Vector Stores with CO2 footprint data for food products

- CO2 footprint estimation SaaS APIs such as Climatiq, with web search as a fallback (Figure 7).

It is essential that the agent handles tool execution errors gracefully and switches to a different tool if no relevant footprint can be obtained from a given one. LLMs also tend to perform arithmetic poorly. Consequently, the CO2 footprint estimator agent needs access to either a calculator tool or a sandboxed code executor tool in order to perform exact computations. An autonomous tool-calling agent is a good design choice for flexibly selecting among multiple tools and for recognizing when the data is complete and ready to be aggregated.
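The fallback behavior can be sketched as an ordered chain of tools, where errors and empty results both lead to the next tool. In the project the agent chooses tools autonomously, so the fixed order here is a simplification. The three lookup functions, `ToolError`, and all values are hypothetical stubs.

```python
from typing import Callable, Optional


class ToolError(Exception):
    """Raised by a tool when it cannot complete a lookup."""


def vector_store_lookup(ingredient: str) -> Optional[float]:
    return {"sugar": 1.0}.get(ingredient)  # None means no relevant hit


def footprint_api_lookup(ingredient: str) -> Optional[float]:
    raise ToolError("service unavailable")  # simulates a failing external API


def web_search_lookup(ingredient: str) -> Optional[float]:
    return {"butter": 9.0}.get(ingredient)


TOOL_CHAIN: list[tuple[str, Callable[[str], Optional[float]]]] = [
    ("vector_store", vector_store_lookup),
    ("footprint_api", footprint_api_lookup),
    ("web_search", web_search_lookup),
]


def find_footprint(ingredient: str) -> tuple[Optional[float], list[str]]:
    """Try each tool in order; return the first value found plus a trace of attempts."""
    trace: list[str] = []
    for name, tool in TOOL_CHAIN:
        try:
            value = tool(ingredient)
        except ToolError as exc:
            trace.append(f"{name}: failed ({exc})")
            continue
        if value is None:
            trace.append(f"{name}: no result")
            continue
        trace.append(f"{name}: found {value}")
        return value, trace
    return None, trace


value, trace = find_footprint("butter")
print(value, trace)
```

Returning the trace alongside the value makes it visible which tools failed, and a `None` result tells the caller that the ingredient is not yet ready for aggregation.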

Orchestrating Multi-Agent Collaboration

End users should not have to navigate multiple agents or steps manually. They want the job done through an intuitive, user-friendly interface. This is why we chose to integrate the task-specific agents into a coordinated, multi-agent application that works end to end (Figure 8).

Our design combines an agentic workflow with a multi-agent supervisor. In the first step of the workflow, the image parsing agent extracts dietary information and ingredients from a food product image.

In the second step, a multi-agent supervisor retrieves carbon-footprint estimates and ingredient compositions from dedicated agents with access to structured data sources via Text2SQL, as well as retrieval and web search tools. The supervisor then aggregates the results using the code interpreter tool and returns the final estimate to the user.

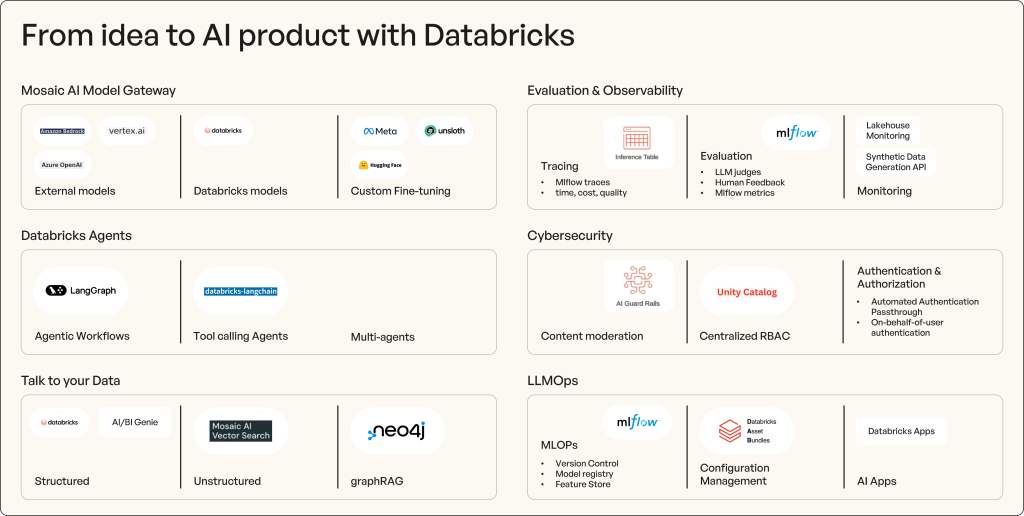

Databricks as a Seamless AI Agent Builder Platform

Databricks offers the right combination of tools to build, test, optimize, deploy, and monitor AI agents, either in code (Figure 9) or via the no-code Agent Bricks interface newly announced at the Data + AI Summit.

Features that make Databricks the right platform for building, optimizing, and deploying AI Agents:

- External LLMs (Anthropic Claude models, OpenAI GPT models, Google Gemini models, Azure OpenAI, …), Databricks models (DBRX, …), Hugging Face models, and custom fine-tuned models can all be accessed via the Mosaic AI Gateway. It offers a unified interface across all models, making it easier to monitor performance and usage metrics such as token consumption and latency.

- Databricks Agents are fully compatible with popular agentic AI frameworks such as LangGraph and LangChain and support predictions with or without streaming.

- Databricks Agents interface with structured relational and tabular data via Genie spaces and with unstructured image and text data via Mosaic AI Vector Search. Additionally, graph RAG can be enabled by using Neo4j graph databases.

- Databricks natively brings a complete set of observability, evaluation, and monitoring capabilities. MLflow enables detailed agent tracing to analyze which tool was used with which inputs and outputs, the inference time and token consumption of each step, and which tool executions failed. The Mosaic synthetic data generation API generates end-to-end test sets from human examples, so that AI agents can be evaluated on them with well-established GenAI (Generative AI) evaluation metrics and LLM judge metrics.

- Importantly, Mosaic AI Gateway models can be configured with AI guardrails, based on models and rules, that apply to every incoming request and every LLM output. Guardrail events are logged as metadata in the traces. Unity Catalog provides central, unified role-based access control for all Databricks assets, including models, agents, dashboards, tables, and indices. Authentication and authorization for agents are supported via automatic authentication passthrough and on-behalf-of-user authentication.

- Databricks also functions as a full MLOps platform, offering version control, model registry, experiment tracking, and deployment tools through Databricks Asset Bundles. AI Apps can be seamlessly deployed using Databricks Apps.

Databricks Combined with Agentic AI for Climate-Conscious Decisions

This project shows what agentic AI can do when applied with purpose: simplify complex tasks and surface insights that matter. In just two weeks, we moved from raw data to a testable application capable of estimating food-related CO₂ footprints using both structured and unstructured inputs.

Each agent was designed for a specific task—extracting product data, querying ingredient databases, calculating emissions—and brought together into a unified, multi-agent workflow. Thanks to the Databricks platform, we were able to go from concept to deployment with orchestration, observability, and evaluation tools already in place.

Looking ahead, agentic AI could take on more advanced carbon footprint models from the world of LCA, including methodologies like EN 15804 or allocation by substitution. These standardized approaches involve repetitive, data-heavy steps that are well suited to automation, especially when combined with human oversight.

With the right platform and thoughtful design, AI agents can help turn these frameworks into everyday tools. That means giving consumers the ability to make more informed, climate-conscious decisions—starting with something as simple as a picture of a product.