In recent years, Responsible AI (RAI) has moved to the forefront of priorities for financial institutions. This shift accelerated in 2022 as the EU AI Act gained momentum, raising concerns about compliance, data governance, and public trust. As the first comprehensive European regulation for artificial intelligence, the EU AI Act sets clear rules for developing, deploying, and using AI systems, with obligations scaled to each system’s potential risk to health, safety, and fundamental rights.

Key challenge

A major European bank approached us to understand whether—and how—its AI initiatives complied with the regulatory requirements of the EU AI Act.

Our engagement focused on three priorities: assessing the organization’s Responsible AI maturity, identifying use cases that could be classified as high-risk, and defining a practical roadmap to embed compliance across teams. Beyond governance and technical controls, the EU AI Act emphasizes the human factor: operators of high-risk AI systems, including employees and contractors, must possess sufficient AI literacy. We therefore had to ensure that these operators understood how the systems behave and could make informed, accountable decisions when interacting with them.

Our approach

Our mission was to first determine whether the bank’s use cases fell into the high-risk category defined by the EU AI Act, then evaluate the organization’s RAI maturity, and finally turn the resulting gap analysis into concrete decisions and next actions.

To achieve this, we began by applying the Act’s risk-based assessment framework to all identified use cases. Because Responsible AI is highly context-specific and difficult to evaluate generically, especially when standardized guidelines for large organizations are lacking, each project required an individual review or, at minimum, a department-level evaluation.

Our approach included two main steps.

Step 1: RAI Maturity Score Report

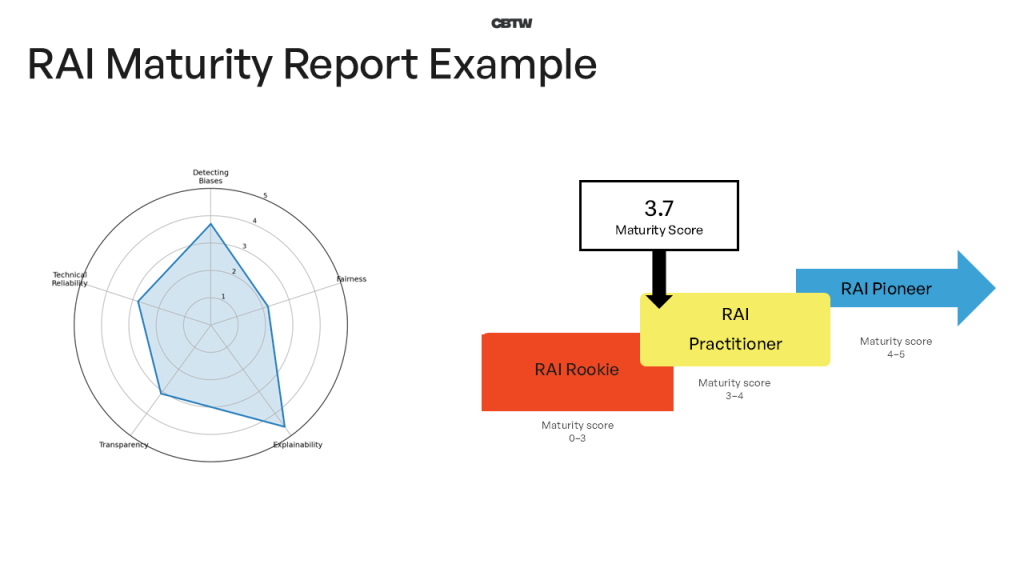

To evaluate the bank’s Responsible AI maturity, we first ran a questionnaire jointly with the client. The assessment comprised 28 questions organized around three key areas:

- Bias and fairness

- Explainability and transparency

- Technical reliability

The client rated each question on a scale from 1 to 5. Sample questions included:

- “Is the use of protected attributes (e.g., gender, age) as features regulated, documented, and reviewed to prevent discrimination?” (Bias and fairness)

- “Are there applications or frameworks that help end-users understand the decisions made by AI systems?” (Explainability and transparency)

Based on the responses, we compiled a comprehensive maturity score report. This report served as a shared reference point for the gap analysis, highlighting where current practices diverged from the standards mandated by the EU AI Act.
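How individual answers roll up into a per-dimension score is a design choice. A minimal sketch, assuming an unweighted average per dimension and a hypothetical target level of 4.0, could look like the following. The function names, sample answers, and threshold are illustrative and are not the scoring method used in this engagement.

```python
from statistics import mean

# The three assessed dimensions from the questionnaire.
DIMENSIONS = (
    "Bias and fairness",
    "Explainability and transparency",
    "Technical reliability",
)


def maturity_scores(responses: list[tuple[str, int]]) -> dict[str, float]:
    """Average the 1-5 answers per dimension (hypothetical unweighted scheme)."""
    by_dimension: dict[str, list[int]] = {}
    for dimension, score in responses:
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension}")
        if not 1 <= score <= 5:
            raise ValueError(f"score out of range 1-5: {score}")
        by_dimension.setdefault(dimension, []).append(score)
    # Convert to float so every dimension is reported in the same type.
    return {dim: round(float(mean(scores)), 2) for dim, scores in by_dimension.items()}


def gaps(scores: dict[str, float], target: float = 4.0) -> list[str]:
    """List dimensions whose average falls below a hypothetical target level."""
    return sorted(dim for dim, value in scores.items() if value < target)


# Illustrative answers only; the real questionnaire had 28 questions.
sample = [
    ("Bias and fairness", 3),
    ("Bias and fairness", 2),
    ("Explainability and transparency", 4),
    ("Explainability and transparency", 5),
    ("Technical reliability", 4),
]
scores = maturity_scores(sample)
print(scores)
print(gaps(scores))
```

An average can hide a single weak answer, so weighting questions by regulatory relevance or also reporting the lowest score per dimension would be equally reasonable choices for a gap analysis.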

Step 2: Implementation and Remediation

Once the gap analysis had shown where current practices fell short of EU AI Act expectations, we ran a focused on-site workshop. This working session turned the findings into concrete decisions and next actions, aligning stakeholders on two main questions:

- Which AI systems genuinely qualify as high-risk under the EU AI Act?

- What enhancements are required to meet regulatory expectations?

For each project, participants reviewed whether the relevant requirements had been considered and how they were currently addressed. To ground these principles in reality, the client brought forward actual use cases from past projects. This enabled practical discussions, fostered a common language around Responsible AI, and ensured alignment across teams.

We recommended concrete actions based on the identified risks and explored edge cases to define mitigation strategies. While this assessment focused on three targeted dimensions (Bias and fairness, Explainability and transparency, Technical reliability), a comprehensive RAI maturity evaluation should also cover other critical areas, such as data governance and quality, sustainability, and ethics.

To close the engagement, we delivered a final presentation and held a dialogue session to confirm findings, lock in key takeaways, and align on next steps for compliance and governance. A written report documented the assessments, core findings, major risks, best practices, and agreed actions.

Key benefits

The assessment clarified which use cases were most likely to be classified as high-risk and helped the bank prioritize the improvements needed to close compliance gaps.

Main benefits included:

- Focused prioritization: A targeted approach directed time and resources toward the areas with the highest impact.

- Navigating the EU AI Act: Clarification of the requirements that apply to high-risk AI systems.

- Shared baseline across teams: A maturity score for each assessed dimension, providing a common reference point.

- Actionable recommendations: Practical guidance for both mature projects and early-stage initiatives, with the greatest value for technically stable projects undergoing detailed Responsible AI reviews.

- Stronger forward monitoring: Improved visibility into key compliance indicators for upcoming AI projects.

- Consistent practices: Enhanced alignment across departments and AI initiatives.

- Lasting knowledge: Increased RAI awareness and education, fostering gradual cultural change.

Beyond immediate compliance, the assessment triggered ongoing discussions about priorities and governance. It also reinforced that Responsible AI is an iterative effort that benefits from continuous refinement of tools, processes, and skills across the organization.